Last week, I found out that my credit card information was stolen and used by criminals for an expensive shopping spree. This incident inspired me to revisit my previous post about drug dealing on the Darknet and research how stolen credit cards are traded there. I was able to gather a dataset of 42,497 stolen U.S. credit cards which are currently sold on a Darknet site. At the end of this post, you’ll find a link to a site that allows you to see if your card is in this dataset.

The Darknet, a part of the internet which is only accessible with special software like the Tor Browser, allows users and website owners to stay anonymous. The anonymity not only makes the Darknet an essential tool for people like reporters or activists, but is also used widely by criminals. Nowadays the Darknet hosts multiple Cryptomarkets, which are marketplaces similar to eBay where vendors can sell products like illicit drugs, counterfeit money, or stolen credit cards. I decided to take a close look at the AlphaBay Market because it’s one of the largest marketplaces and relatively easy to scrape.

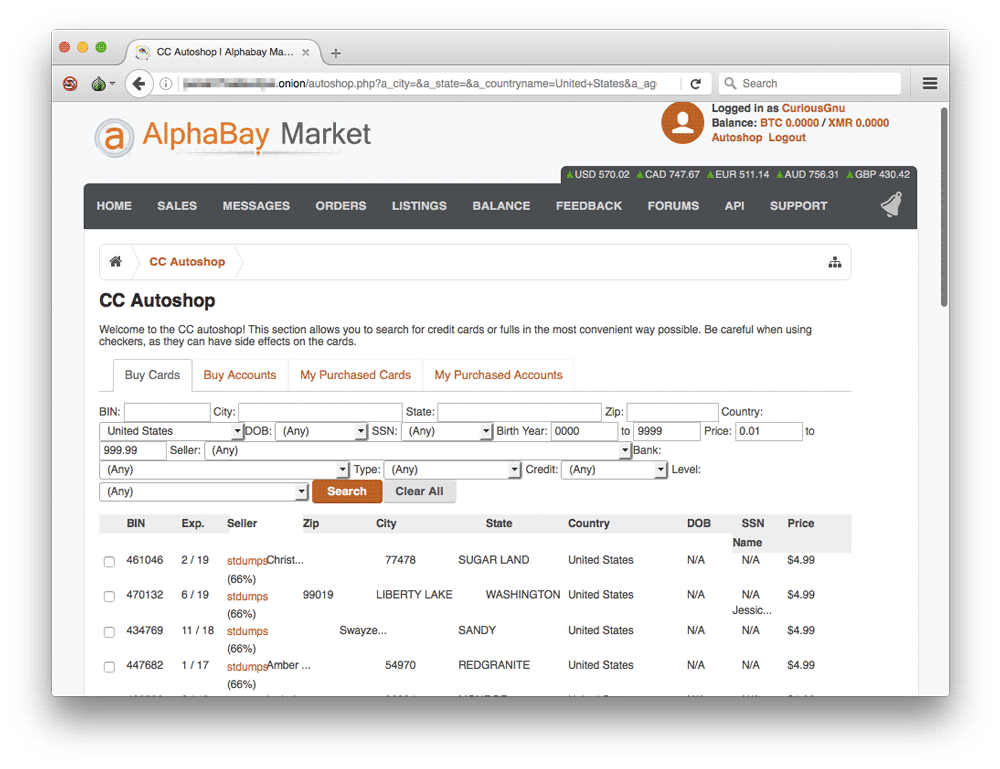

The AlphaBay Market has a feature called CC Autoshop that allows potential buyers to search a database of all the stolen credit cards that are offered by different sellers. For example, it’s possible to only search for cards from a particular city. That’s important for criminals who try to circumvent anti-fraud measures by using stolen cards from the same region or state in which they are located. But it also means that the sellers have to reveal information that we can analyze.

First, I downloaded the entire CC Autoshop as HTML files and then used the Python library Beautiful Soup to extract the information. The result was 42,497 U.S. credit cards that were offered on September 1st, 2016. The total asking price of these cards is $324,941, an average of $7.65 per card. Some cards (1,025) even include the Social Security number of the owner. Considering that nearly 13 million Americans are victims of identity fraud each year, this number almost seems insignificant, but it can nevertheless cause millions of dollars in damages. What I find particularly concerning is that anyone can purchase these cards after doing basic internet research.
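To make the extraction step more concrete, here is a minimal sketch of how saved HTML could be turned into records and summarized with Beautiful Soup. The table layout, the column order, and the names `SAMPLE_HTML`, `parse_listings`, and `summarize` are assumptions made up for illustration; the real CC Autoshop markup is not shown in this post and will differ.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for illustration only; the real page layout is an unknown.
SAMPLE_HTML = """
<table id="listings">
  <tr><th>State</th><th>Seller</th><th>SSN</th><th>Price</th></tr>
  <tr><td>DE</td><td>seller_a</td><td>no</td><td>$7.50</td></tr>
  <tr><td>NY</td><td>seller_b</td><td>yes</td><td>$12.00</td></tr>
  <tr><td>DE</td><td>seller_a</td><td>no</td><td>$5.00</td></tr>
</table>
"""


def parse_listings(html):
    """Extract one dict per table row from a saved HTML page."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id="listings")
    listings = []
    if table is None:
        return listings
    for row in table.find_all("tr"):
        cells = [td.get_text(strip=True) for td in row.find_all("td")]
        if len(cells) != 4:
            continue  # header rows contain <th> cells, so they are skipped here
        state, seller, ssn, price = cells
        listings.append({
            "state": state,
            "seller": seller,
            "has_ssn": ssn == "yes",
            "price": float(price.lstrip("$").replace(",", "")),
        })
    return listings


def summarize(listings):
    """Compute the card count, total asking price, average price, and SSN count."""
    total = sum(item["price"] for item in listings)
    return {
        "cards": len(listings),
        "total_value": total,
        "average_price": total / len(listings) if listings else 0.0,
        "with_ssn": sum(item["has_ssn"] for item in listings),
    }


listings = parse_listings(SAMPLE_HTML)
summary = summarize(listings)
print(summary)
```

In practice you would call `parse_listings` once per saved file and concatenate the results before summarizing.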

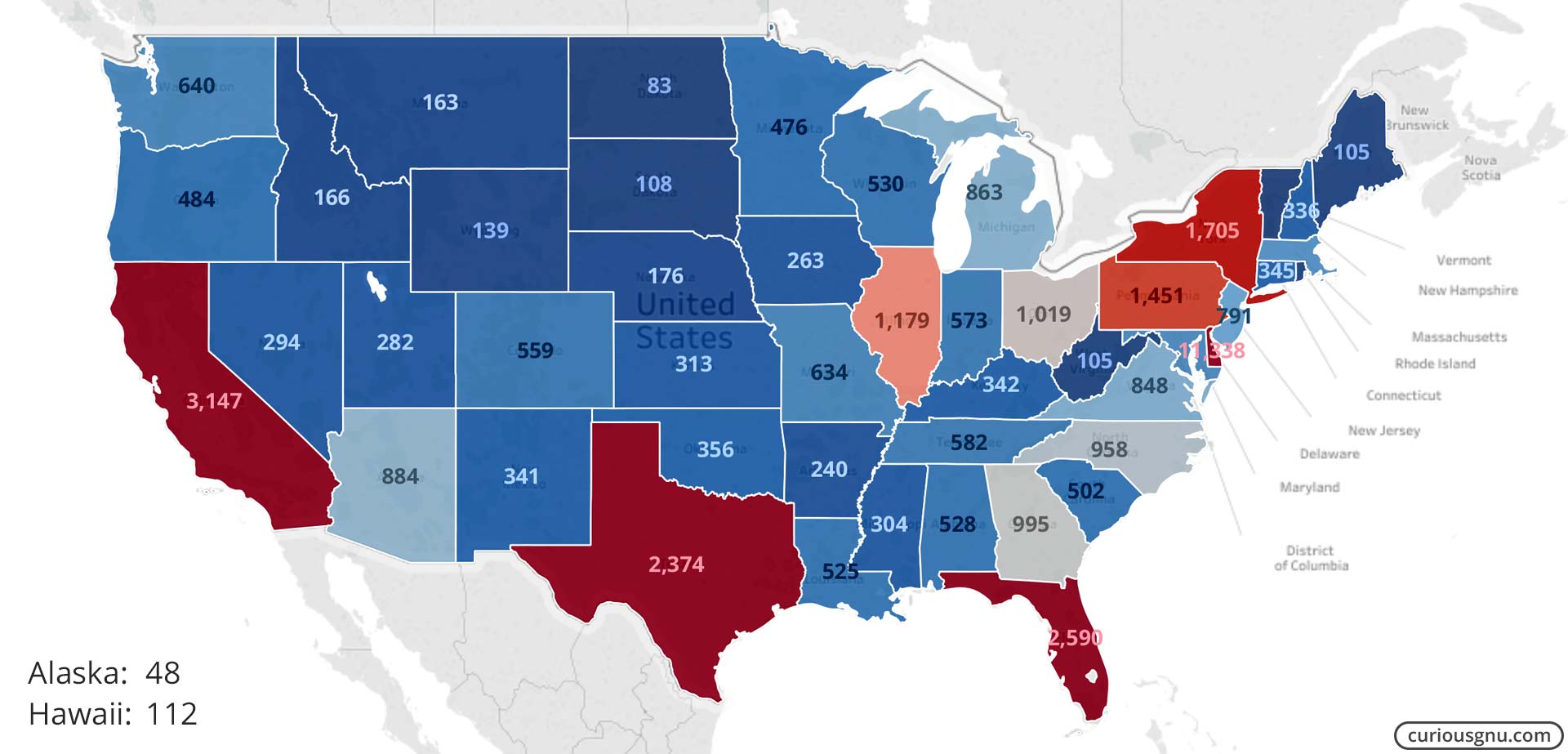

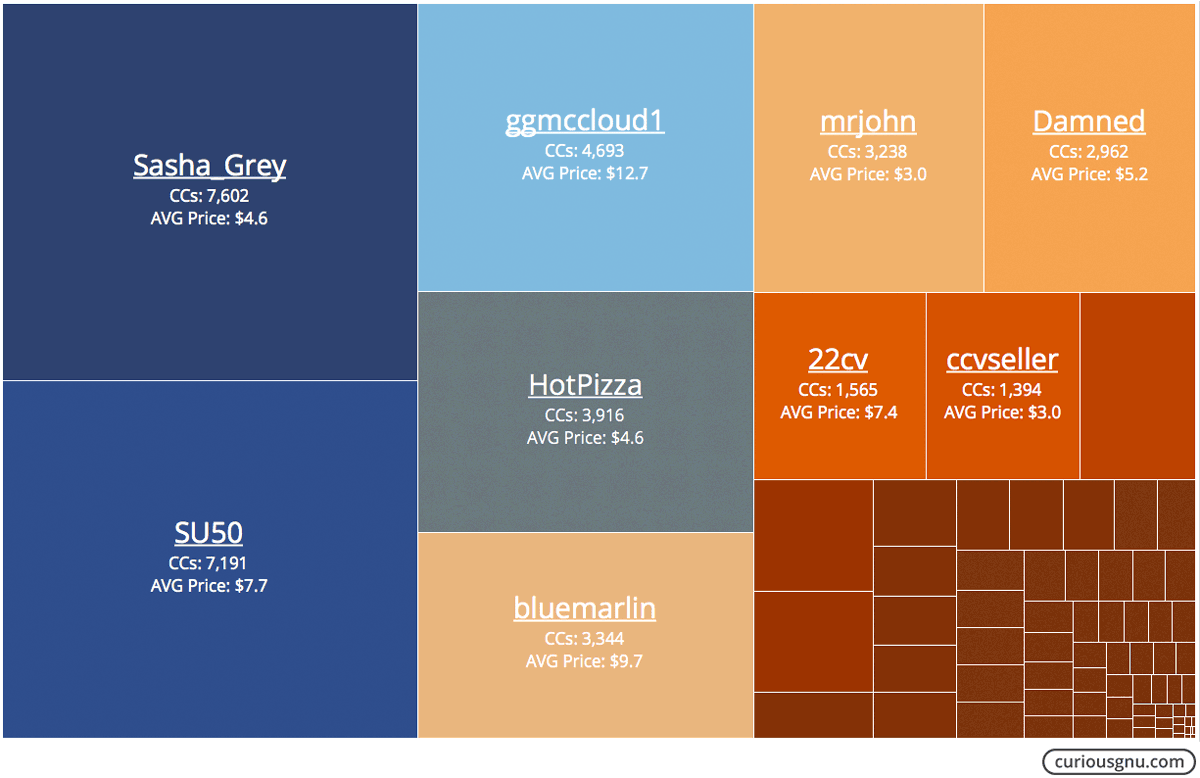

The map above shows how many cards from each state are offered on the AlphaBay Market. It is surprising that 27% (11,338) of them are from Delaware and are sold by only two major sellers (Sasha_Grey and HotPizza). Unfortunately, I can only speculate about the reasons for this. Are those just company credit cards, or does it suggest a local card breach? The following graph is an overview of all active AlphaBay CC vendors based on the total number of cards they offer.
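The per-state numbers come down to counting records by state and dividing by the total. A small sketch of that calculation follows; the function name `state_shares` and the toy `sample_states` list are illustrative assumptions, while the Delaware check at the end uses the two figures quoted above.

```python
from collections import Counter


def state_shares(states):
    """Return (state, count, share of total) tuples, most common first."""
    counts = Counter(states)
    total = sum(counts.values())
    return [(state, n, n / total) for state, n in counts.most_common()]


# Toy input for illustration; the real dataset has 42,497 rows.
sample_states = ["DE", "DE", "NY", "CA", "DE", "NY"]
shares = state_shares(sample_states)
for state, n, share in shares:
    print(f"{state}: {n} cards ({share:.0%})")

# Sanity check of the Delaware figure from the post.
delaware_share = 11_338 / 42_497
print(f"Delaware share: {delaware_share:.1%}")
```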

If you would like to check whether your credit card is in the dataset, you can either download it as a CSV file or use the following site to search the database: www.curiousgnu.com/assets/tools/cc.html

How to scrape Darknet sites?

Scraping hidden services in the Tor network (Darknet sites) is very similar to regular web scraping with the exception that most hidden services don’t use JSON APIs, meaning that you have to extract the information from an HTML file. My preferred method is first to download all pages as HTML files before using Python and Beautiful Soup to extract the information I need. The following steps should work on OS X and Linux systems.

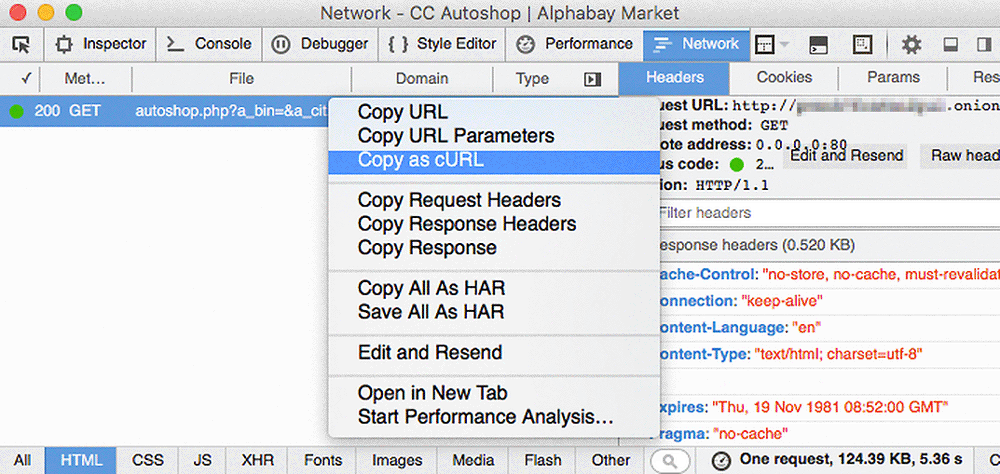

- Visit the site you want to scrape in the Tor Browser.

- Open the Web Console (Right Click > Inspect Element).

- Go to the Network Tab.

- Right click on the main GET request (see Domain column) and select Copy as cURL to copy the curl command to replicate the request.

- Do not close the Tor browser.

- Before you run the curl command in the Terminal, add the following options to the end of it: `--socks5-hostname 127.0.0.1:9150 --output o.html`. They tell curl to route the request through the Tor client and save the output to o.html.

- In case you want to download multiple pages, you can use a bash for loop like this: `for i in {1..10}; do curl […] page=${i} --output ${i}.html; done`.

- Follow the Beautiful Soup documentation to extract the needed information from the saved HTML files.
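If you prefer to drive the download loop from Python instead of bash, the steps above can be sketched roughly as follows. This is only an illustration: `URL_TEMPLATE` is a made-up placeholder address, `build_curl_command` is a hypothetical helper, and the headers and cookies that "Copy as cURL" adds to the real command are left out and would need to be appended. The script only prints the commands; the `subprocess.run` call is left commented out.

```python
import shlex

# Local SOCKS port opened by the running Tor Browser, as used in the steps above.
TOR_SOCKS_PROXY = "127.0.0.1:9150"

# Placeholder address for illustration; replace it with the URL from your copied command.
URL_TEMPLATE = "http://example.onion/listing?page={page}"


def build_curl_command(url_template, page, proxy=TOR_SOCKS_PROXY):
    """Build the curl argument list that fetches one page through Tor."""
    return [
        "curl",
        url_template.format(page=page),
        "--socks5-hostname", proxy,   # resolve and connect via the Tor client
        "--output", f"{page}.html",   # save each page to its own file
    ]


commands = [build_curl_command(URL_TEMPLATE, page) for page in range(1, 11)]
for cmd in commands:
    print(shlex.join(cmd))
    # To actually download, import subprocess and run:
    # subprocess.run(cmd, check=True)
```

Passing the arguments as a list avoids shell quoting problems when the URL contains characters such as `?` or `&`.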

If you have questions or concerns, feel free to write me an email.